February 2026

How Investment Bankers Use Shortcut

Four real-world examples.

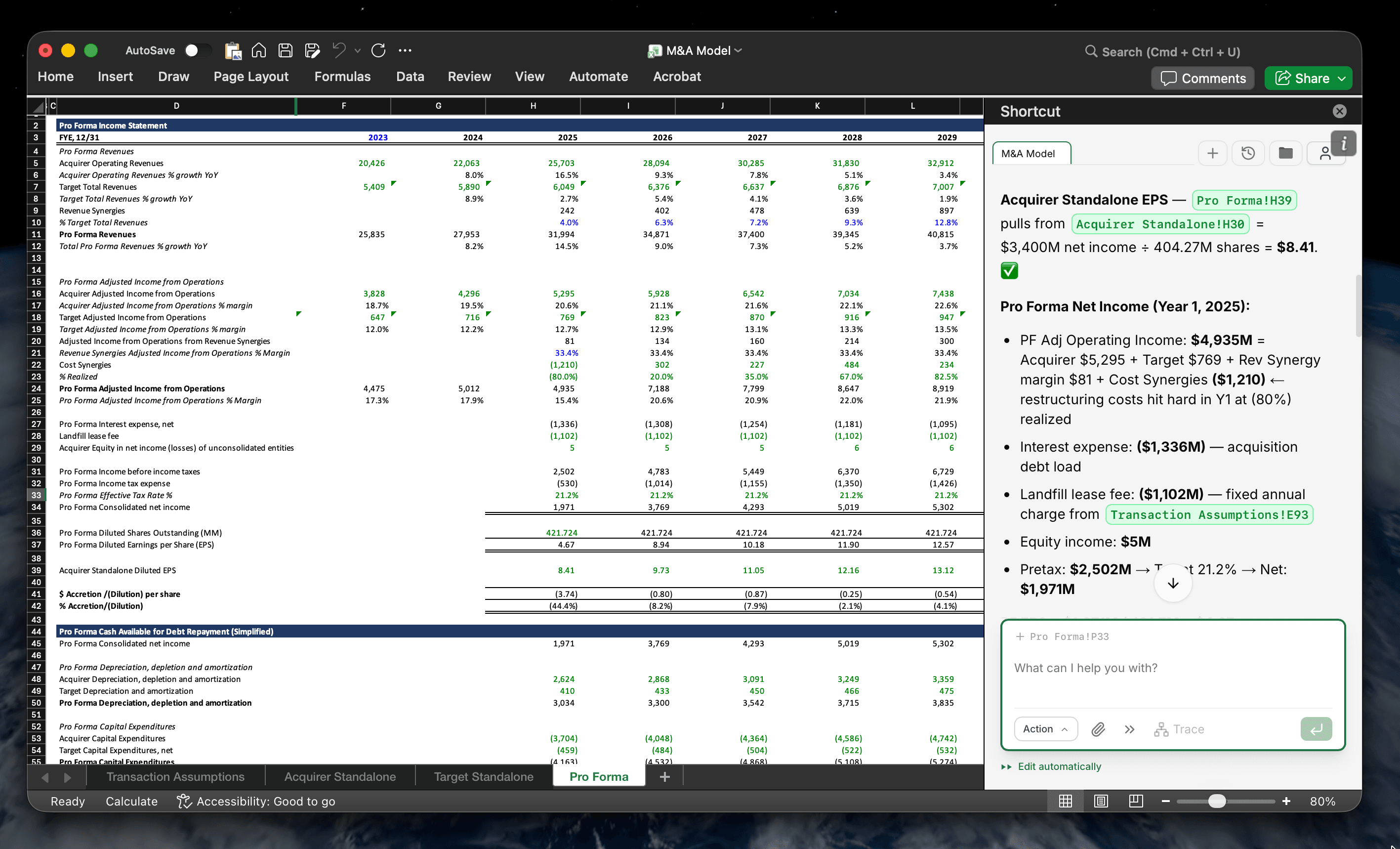

Accretion/Dilution Analysis at Deal Speed

Build a full accretion/dilution analysis on a live transaction: shares issued from the consideration mix, pro forma income bridge with after-tax interest on acquisition debt, incremental D&A from purchase price allocation, fee amortization, three-year synergy phase-in, pro forma EPS versus standalone, and sensitivity tables crossing purchase price against cash/stock mix. The first build is a known workflow. The twelfth rerun — after price, structure, and financing assumptions move — is where execution quality breaks down. A ~1-hour iteration becomes a ~10-minute recalculation.

Accretion/dilution model with pro forma bridge, EPS sensitivity tables, and breakeven purchase price outputs.

- Per rerun: ~10 min

- Scenarios tested: 12+

- Typical iteration: ~1 hr → ~10 min

The Real Problem Is the Twelfth Run

- The first build is 90-150 minutes of known mechanics: shares issued, financing bridge, and pro forma EPS.

- The pressure is the next 10-12 reruns: new offer price, new cash/stock mix, new financing terms, then one more board question.

- Each change moves through three linked paths that converge on EPS: consideration affects share count, financing affects after-tax interest, and synergies affect operating income.

- When the acquirer trades at a higher P/E than the multiple it pays for the target, a stock deal can look mechanically accretive before any operating value is created.

- In a live process, timing matters as much as accuracy: the team needs a defensible rerun in minutes, not overnight.
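The three linked paths can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Shortcut's implementation: the `Deal` fields, the function names, and every number are hypothetical, the cash portion is assumed to be fully debt-financed, and incremental D&A and fee amortization are left out.

```python
from dataclasses import dataclass


@dataclass
class Deal:
    """Hypothetical inputs. Dollar figures and share counts are in millions."""
    acquirer_net_income: float
    acquirer_shares: float
    acquirer_share_price: float
    target_net_income: float
    purchase_price: float     # equity purchase price
    stock_pct: float          # share of consideration paid in stock (0 to 1)
    debt_rate: float          # pre-tax rate on acquisition debt
    tax_rate: float
    pretax_synergies: float   # synergies phased in for the year modeled


def pro_forma_eps(d: Deal) -> float:
    # Path 1: the consideration mix drives new shares issued.
    new_shares = d.purchase_price * d.stock_pct / d.acquirer_share_price
    # Path 2: the cash portion (assumed all debt) drives after-tax interest.
    debt = d.purchase_price * (1 - d.stock_pct)
    after_tax_interest = debt * d.debt_rate * (1 - d.tax_rate)
    # Path 3: synergies flow through operating income, then tax.
    after_tax_synergies = d.pretax_synergies * (1 - d.tax_rate)
    net_income = (d.acquirer_net_income + d.target_net_income
                  - after_tax_interest + after_tax_synergies)
    return net_income / (d.acquirer_shares + new_shares)


def accretion(d: Deal) -> float:
    """Pro forma EPS versus standalone EPS, as a fraction."""
    standalone_eps = d.acquirer_net_income / d.acquirer_shares
    return pro_forma_eps(d) / standalone_eps - 1


# Acquirer at 20x P/E pays 15x for the target, all stock, zero synergies.
deal = Deal(1000.0, 500.0, 40.0, 100.0, 1500.0, 1.0, 0.07, 0.25, 0.0)
print(f"{accretion(deal):.2%}")  # 2.33%
```

The example deal is accretive with zero synergies, purely because the acquirer's P/E (20x) exceeds the multiple paid (15x). Raising `purchase_price` to 2000 (20x) brings accretion to zero.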

Manual Workflow (Today)

- Step 1: Intake the latest deal turn (~10m). Collect the new offer price, mix, financing terms, and synergy timing from client and internal comments.

- Step 2: Update consideration schedules (~14m). Rework share issuance, dilution assumptions, and transaction-fee treatment.

- Step 3: Rewire financing bridge (~15m). Flow debt sizing, after-tax interest, and D&A step-ups through pro forma earnings.

- Step 4: Rebuild sensitivity outputs (~14m). Refresh price/mix and synergy/debt tables plus breakeven thresholds.

- Step 5: QC before circulation (~7m). Tie EPS outputs and distribute the rerun pack to VP/MD.
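The price/mix sensitivity table and breakeven threshold from Step 4 can be sketched as follows. All names and default inputs are hypothetical, synergies and D&A step-ups are ignored, and the breakeven search uses plain bisection, which works here because accretion falls as the purchase price rises.

```python
def eps_accretion(price, stock_pct, *, acq_ni=1000.0, acq_shares=500.0,
                  acq_px=40.0, tgt_ni=100.0, rate=0.07, tax=0.25):
    """Accretion as a fraction of standalone EPS (hypothetical defaults, in millions)."""
    new_shares = price * stock_pct / acq_px
    after_tax_interest = price * (1 - stock_pct) * rate * (1 - tax)
    pf_eps = (acq_ni + tgt_ni - after_tax_interest) / (acq_shares + new_shares)
    return pf_eps / (acq_ni / acq_shares) - 1


def sensitivity(prices, mixes):
    """Grid keyed by purchase price, then by stock percentage."""
    return {p: {m: eps_accretion(p, m) for m in mixes} for p in prices}


def breakeven_price(stock_pct, lo=0.0, hi=10_000.0, tol=1e-6):
    """Highest purchase price that is still EPS-neutral, found by bisection."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if eps_accretion(mid, stock_pct) > 0:
            lo = mid   # still accretive: breakeven is higher
        else:
            hi = mid   # dilutive or neutral: breakeven is at or below mid
    return (lo + hi) / 2


grid = sensitivity(prices=[1500, 1750, 2000, 2250], mixes=[0.0, 0.5, 1.0])
for price, row in grid.items():
    print(price, "  ".join(f"{m:.0%} stock: {a:+.2%}" for m, a in row.items()))
print(f"All-stock breakeven: {breakeven_price(1.0):,.0f}")
```

Because every cell is a function of the same inputs, a changed offer price or financing rate only requires calling `sensitivity` again rather than rewiring the table.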

Shortcut-Assisted Workflow

- Step 1: Tag the deal skill and instructions (~2m). Pass the latest ask in structured form so company-specific conventions are loaded immediately.

- Step 2: Wire model logic autonomously (~3m). Linked schedules, bridge mechanics, and sensitivities update from instructions without manual rewiring.

- Step 3: Pull supporting context quickly (~2m). Recent disclosure context can be added via web search when assumptions move mid-process.

- Step 4: Review exception set (~2m). The analyst focuses on flagged linkage or circularity issues instead of cell-by-cell checks.

- Step 5: Approve and rerun (~1m). Additional price or structure questions are answered in the same workstream.

What This Unlocks

- Board-call responsiveness: price and mix questions are answered during the meeting, not overnight.

- More structure coverage: teams test more scenarios before committing to a recommendation.

- Higher-value analyst time: effort shifts from formula plumbing to judgment and negotiation prep.

Shortcut compresses each A/D rerun from ~1 hour to ~10 minutes, so the team can respond to price and structure changes in real time.

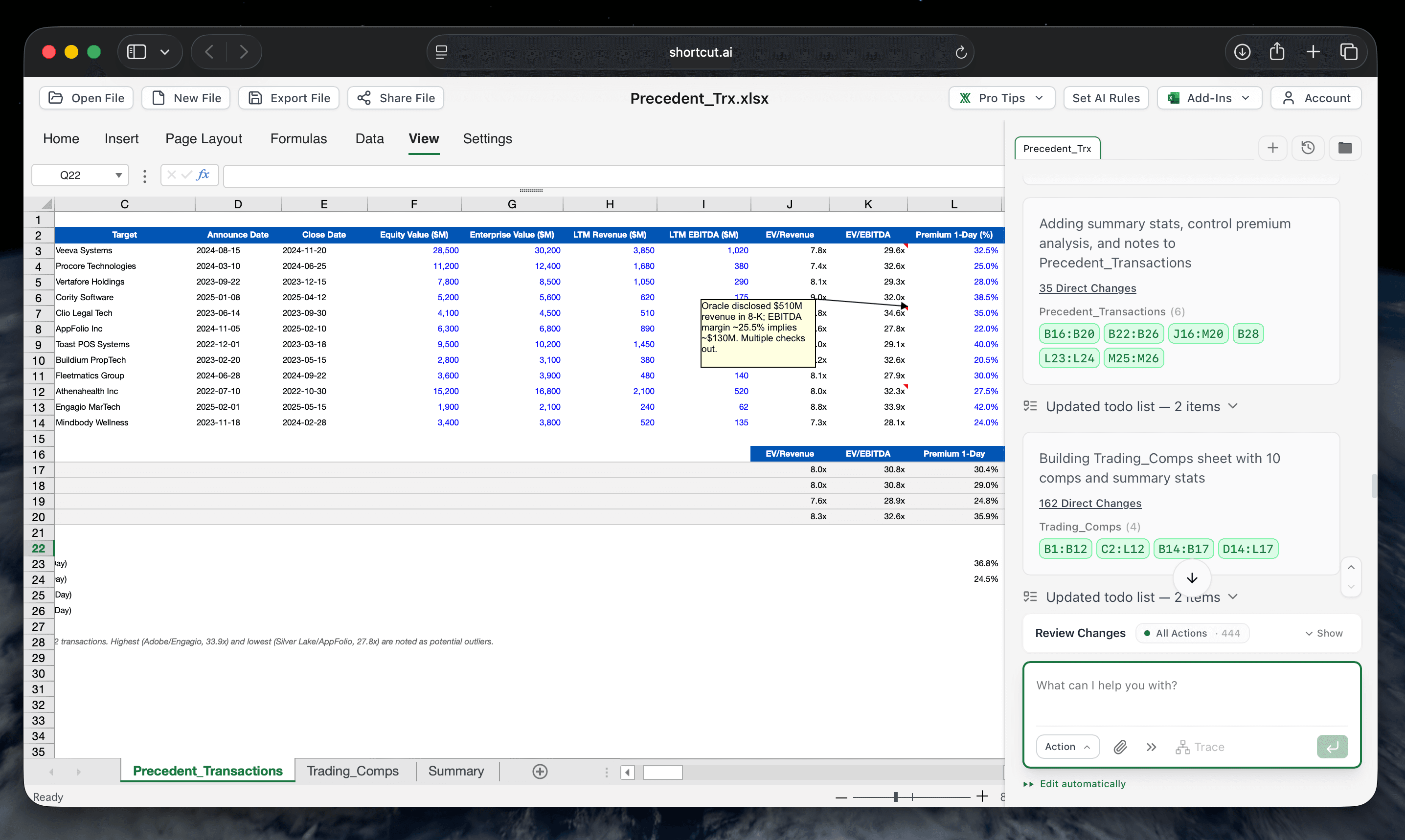

Comparable Analysis from Filings

Refresh precedent transactions and trading comps for a board deck: verify purchase price, consideration mix, implied multiples, and premiums directly from merger proxies and 8-Ks; reconcile adjustments buried in footnotes; and keep every value sourced to filing, date, and page. Market data updates quickly. Filing-sourced analytics do not. An update cycle that usually takes ~5 hours is reduced to a ~45-minute review.

Precedent transactions table with filing-sourced multiples, control premiums, and page-level citations.

- Precedents refreshed: 15+

- Filings reviewed: 40+

- Peers updated: 25+

- Update cycle: ~5 hrs → ~45 min

The Real Problem Is Maintaining It All

- Pitchbooks are never static: numbers are reused across active mandates while market levels and precedent sets keep moving.

- The key values are often buried across pages: purchase price on page 4, financials on page 87, and adjustments in footnotes deep in the proxy.

- Requests like "add three more deals" or "retier the peer set" restart sourcing and citation work immediately.

- Manual sourcing consumes the hours that should go to judgment: which precedents are actually comparable and why.
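One way to keep every value tied to filing, date, and page is to store the citation next to the number, so that implied multiples and premiums inherit their sources. The sketch below is illustrative only: the class names, fields, company, and figures are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Sourced:
    """A number plus the filing location it was read from."""
    value: float
    filing: str   # form type, e.g. "8-K" or "DEF 14A"
    filed: date
    page: int

    def cite(self) -> str:
        return f"{self.filing}, filed {self.filed.isoformat()}, p. {self.page}"


@dataclass(frozen=True)
class Precedent:
    target: str
    enterprise_value: Sourced   # $ millions
    ltm_ebitda: Sourced         # $ millions
    offer_price: Sourced        # $ per share
    unaffected_price: Sourced   # $ per share, before deal news

    def ev_to_ebitda(self) -> float:
        return self.enterprise_value.value / self.ltm_ebitda.value

    def premium(self) -> float:
        return self.offer_price.value / self.unaffected_price.value - 1

    def sources(self) -> dict:
        """Field name to citation, for in-cell notes or a footnote table."""
        return {
            "enterprise_value": self.enterprise_value.cite(),
            "ltm_ebitda": self.ltm_ebitda.cite(),
            "offer_price": self.offer_price.cite(),
            "unaffected_price": self.unaffected_price.cite(),
        }


# Hypothetical deal with made-up figures and page references.
deal = Precedent(
    target="ExampleCo",
    enterprise_value=Sourced(1200.0, "8-K", date(2025, 3, 14), 4),
    ltm_ebitda=Sourced(100.0, "DEF 14A", date(2025, 4, 2), 87),
    offer_price=Sourced(50.0, "8-K", date(2025, 3, 14), 4),
    unaffected_price=Sourced(40.0, "DEF 14A", date(2025, 4, 2), 52),
)
print(f"{deal.ev_to_ebitda():.1f}x, {deal.premium():.0%} premium")
print(deal.sources()["ltm_ebitda"])
```

Adding three more deals then means appending three more `Precedent` records; the citation structure does not need to be rebuilt.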

Manual Workflow (Today)

- Step 1: Define universe and rules (~40m). Set precedent and peer scope, filters, and tiering criteria for the mandate.

- Step 2: Pull vendor and filing sources (~1h 40m). Gather market fields plus 8-K/DEF 14A detail and footnotes.

- Step 3: Normalize transaction metrics (~50m). Calculate implied multiples, premiums, and adjustment treatment line by line.

- Step 4: Populate with citations (~55m). Enter values and manually add filing/date/page support for committee review.

- Step 5: Rebuild on scope changes (~55m). Add deals, retier peers, and repeat the sourcing pass.

Shortcut-Assisted Workflow

- Step 1: Connect market data inputs (~5m). Bloomberg, FactSet, and Refinitiv fields load alongside mandate criteria.

- Step 2: Pull SEC filings from EDGAR (~12m). 8-K and proxy fields are mapped directly into precedent/comps columns.

- Step 3: Write source notations in-cell (~8m). Filing/date/page notes are attached so analysts can verify with one click.

- Step 4: Enrich edge cases with web search (~10m). Recent amendments or context are captured and reconciled to filings.

- Step 5: Tag comps skill and rerun (~10m). Universe changes refresh quickly without rebuilding citation structure.

What This Unlocks

- Defensible sourcing: committee questions can be answered with direct filing evidence immediately.

- Broader precedent coverage: teams screen more candidates before final selection.

- Faster retiering: scope changes no longer reset the entire workflow.

Structured sourcing frees analysts to focus on precedent selection and judgment instead of filing lookups.

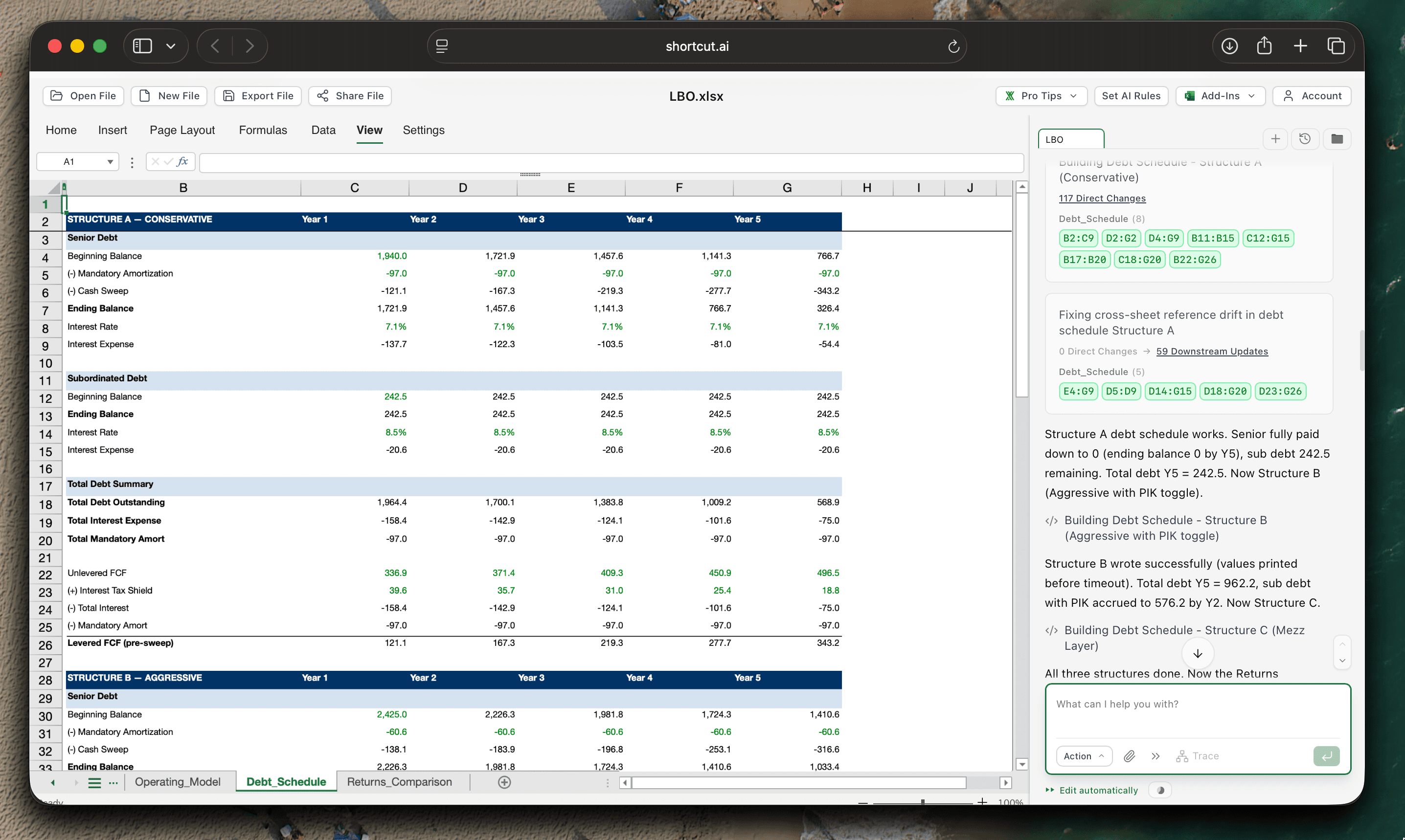

Full LBO Model with Structure Optionality

Build a complete base-case LBO from a financing term sheet, then run that same model across multiple capital structures: sources and uses, five-year operating projections, tranche-level debt schedules with mandatory and optional prepayments, fee circularity resolution, free cash flow waterfall, debt paydown path, and sensitivities across leverage, financing cost, and exit assumptions. Building an initial structure is manageable. Comparing several structures with clean linkage across cash flow, coverage, and leverage is the real bottleneck. A ~5-hour build compresses to ~45 minutes, and additional structures rerun in minutes.

Capital structure comparison with leverage, coverage, deleveraging, and equity-outcome sensitivity tables.

- Formulas wired: 400+

- Projection period: 5 years

- Initial build: ~5 hrs → ~45 min

The Real Problem Is Comparing Structures Under Deadline

- Building base LBO mechanics is a well-understood workflow: operating assumptions, debt schedules, and return math are standard.

- The bottleneck is comparing multiple structures at 4.0x, 4.5x, and 5.0x leverage with different fees and tranche mixes.

- One input change propagates across tabs: tranche sizing, interest expense, cash sweep capacity, covenant headroom, and exit net debt.

- Fee treatment and OID create silent errors when one structure is hardcoded differently from another.

- Circularity compounds the issue: financing fees depend on debt raised, while debt raised depends on total uses, which include those fees.
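The fee circularity can be made concrete with a small fixed-point iteration. The sketch assumes a simplified deal in which sponsor equity is fixed, debt is the plug, and fees are a flat percentage of debt raised; the function names and figures are hypothetical. Under those assumptions the loop also has a closed form, which serves as a check.

```python
def solve_debt_with_fees(purchase_price, sponsor_equity, fee_pct,
                         tol=1e-9, max_iter=100):
    """Iterate until debt and fees are mutually consistent.

    Uses    = purchase price + financing fees
    Sources = debt + sponsor equity
    Fees    = fee_pct * debt   (assumed flat percentage)
    """
    debt = purchase_price - sponsor_equity  # first guess ignores fees
    for iteration in range(1, max_iter + 1):
        fees = fee_pct * debt
        new_debt = purchase_price + fees - sponsor_equity
        if abs(new_debt - debt) < tol:
            return new_debt, fee_pct * new_debt, iteration
        debt = new_debt
    raise RuntimeError("circular reference did not converge")


def closed_form_debt(purchase_price, sponsor_equity, fee_pct):
    """Algebraic solution of debt = price + fee_pct * debt - equity."""
    return (purchase_price - sponsor_equity) / (1 - fee_pct)


debt, fees, n = solve_debt_with_fees(1000.0, 400.0, 0.02)
print(f"debt {debt:.2f}, fees {fees:.2f}, converged in {n} iterations")
print(f"closed form {closed_form_debt(1000.0, 400.0, 0.02):.2f}")
```

Each pass shrinks the error by a factor of `fee_pct`, so the loop converges quickly for realistic fee levels. Hardcoding the fee in one structure and iterating it in another is exactly the kind of inconsistency that produces silent differences between variants.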

Manual Workflow (Today)

- Step 1: Set core assumptions (~35m). Lock operating drivers, purchase terms, fee stack, and sources/uses framework.

- Step 2: Build initial financing structure (~1h 5m). Configure tranches, amortization, rates, and sweep mechanics.

- Step 3: Resolve circularity manually (~1h 50m). Iterate debt-raised and financing-fee dependencies until outputs stabilize.

- Step 4: Produce risk metrics (~50m). Run leverage, coverage, and deleveraging outputs by period.

- Step 5: Replicate for alternatives (~40m). Clone structures and reconcile differences before committee materials.
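Running one schedule function across several opening leverage levels is a simple way to keep structures comparable. The sketch below is a single-tranche illustration with hypothetical names and inputs: interest is charged on the opening balance to avoid intra-period circularity, the interest tax shield is ignored, and the same rate is used for every structure.

```python
def debt_schedule(opening_debt, rate, mandatory_pct, sweep_pct,
                  cash_before_debt_service, ebitda):
    """Per-period paydown, leverage, and coverage for one tranche."""
    rows, balance = [], opening_debt
    for cash, e in zip(cash_before_debt_service, ebitda):
        interest = balance * rate                          # on opening balance
        mandatory = min(balance, opening_debt * mandatory_pct)
        excess = max(0.0, cash - interest - mandatory)     # cash left after service
        optional = min(balance - mandatory, excess * sweep_pct)
        balance -= mandatory + optional
        rows.append({
            "interest": interest,
            "mandatory": mandatory,
            "optional": optional,
            "ending_debt": balance,
            "leverage": balance / e,                       # debt / EBITDA
            "coverage": e / interest if interest > 0 else float("inf"),
        })
    return rows


# Same operating case, three opening leverage levels (hypothetical inputs).
ebitda = [100.0] * 5
cash = [60.0] * 5
for multiple in (4.0, 4.5, 5.0):
    rows = debt_schedule(multiple * ebitda[0], 0.08, 0.01, 1.0, cash, ebitda)
    print(f"{multiple:.1f}x open -> {rows[-1]['leverage']:.2f}x at year 5, "
          f"year-1 coverage {rows[0]['coverage']:.2f}x")
```

Because all three structures share one function and one set of operating inputs, any difference in the outputs comes from the capital structure itself rather than from a divergent formula.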

Shortcut-Assisted Workflow

- Step 1: Tag financing-structure skill (~3m). Load firm conventions and company-specific modeling rules from saved workflows.

- Step 2: Wire debt logic from instructions (~12m). Tranches, fees, covenants, and circularity update autonomously across structures.

- Step 3: Pull market inputs from provider feeds (~8m). Rate/spread assumptions can be sourced from Bloomberg, FactSet, or Refinitiv.

- Step 4: Generate aligned structure views (~14m). Leverage, coverage, and deleveraging outputs are produced side by side.

- Step 5: Focus analyst review on recommendation (~8m). Downside testing and committee framing happen after mechanics are complete.

What This Unlocks

- True structure comparison: multiple financing structures are reviewed under one consistent assumption set.

- Faster decision cycles: assumption updates rerun quickly without breaking linkage.

- Lower silent-error risk: circularity and cross-tab propagation stay consistent across variants.

Shortcut preserves circularity and cross-tab linkage across multiple structures, so each comparison runs on a consistent assumption set.

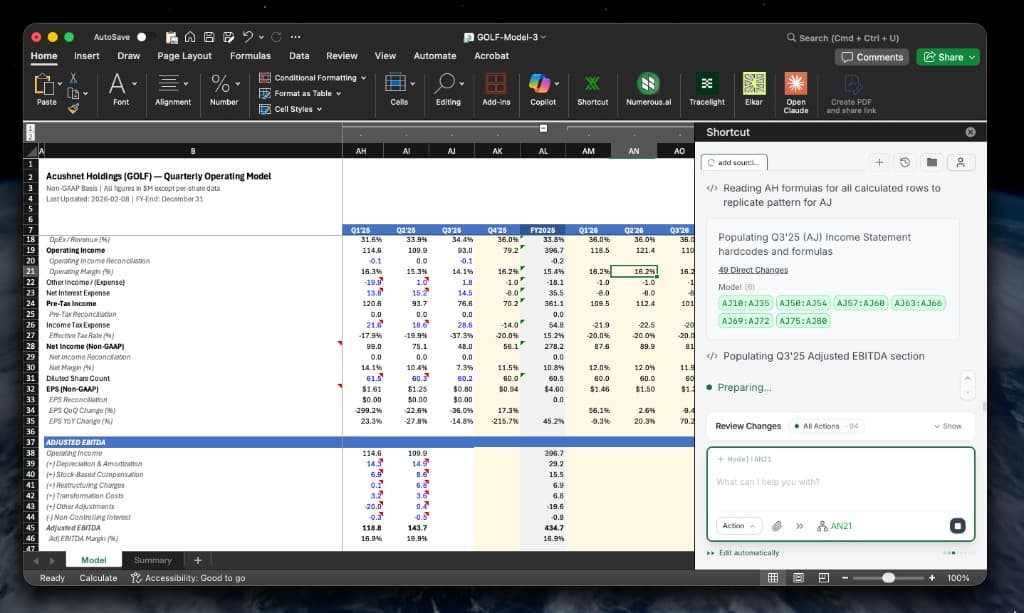

Coverage Model Update from Earnings Filings and Decks

An earnings release hits, and the coverage model has to be current before the next client call. The update pulls from the press release, investor deck, and 10-Q; bridges GAAP EBITDA, company-adjusted EBITDA, and bank-accepted EBITDA by mapping filing-level add-backs and exclusions; remaps add-backs that were renamed since the prior quarter; normalizes sign conventions; extends formulas; and re-verifies three-statement linkage. The analyst still reviews every number. The difference is whether the work is ~2 hours of copy-paste or ~30 minutes of verification per name.

Quarterly coverage model update with adjusted EBITDA bridge mapping, formula extension, and linkage checks.

- Coverage names: 15+

- Line items mapped: 150+

- Per company: ~2 hrs → ~30 min

The Real Problem Is Maintaining 15 Models Through Earnings Season

- Earnings season is a concentrated workload: 15 names × 3 source documents, with client calls already scheduled.

- GAAP EBITDA, company-adjusted EBITDA, and bank-accepted EBITDA can all differ in the same quarter, so the bridge depends on mapping filing-level add-backs and exclusions, not copy-paste.

- Add-back categories change quarter to quarter: rows split, labels move, and prior mappings no longer fit cleanly.

- Sign conventions are fragile: one reversed line can cascade through EBITDA bridges, valuation multiples, and commentary.

- After population, the model still has to tie the income statement to the balance sheet to the cash flow statement, with cash as the plug and a balance check of zero.
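The bridge between the three EBITDA definitions, including renamed labels and sign normalization, can be sketched as a small mapping exercise. Everything here is hypothetical: the taxonomy, the labels, the amounts, and the choice of which add-backs a bank accepts. It also assumes each category has one fixed direction, which would not hold for items such as accrual reversals.

```python
# Hypothetical taxonomy: reported label (lowercased) -> canonical category.
TAXONOMY = {
    "restructuring charges": "restructuring",
    "restructuring and severance": "restructuring",   # renamed this quarter
    "stock-based compensation": "sbc",
    "share-based compensation expense": "sbc",        # renamed this quarter
    "gain on sale of assets": "asset_sale_gain",
}

# +1 items are added back to EBITDA; -1 items (gains) are deducted.
SIGN = {"restructuring": +1, "sbc": +1, "asset_sale_gain": -1}


def bridge(reported_ebitda, reported_items, bank_excluded=frozenset()):
    """Walk from reported EBITDA to company-adjusted and bank-accepted EBITDA."""
    company_adj = bank_adj = reported_ebitda
    unmapped = []
    for label, amount in reported_items.items():
        category = TAXONOMY.get(label.strip().lower())
        if category is None:
            unmapped.append(label)          # surfaced for analyst review
            continue
        # Normalize sign: filings may show the same item as 12 or (12).
        signed = SIGN[category] * abs(amount)
        company_adj += signed
        if category not in bank_excluded:
            bank_adj += signed
    return {"company_adjusted": company_adj,
            "bank_accepted": bank_adj,
            "unmapped": unmapped}


result = bridge(
    reported_ebitda=100.0,
    reported_items={
        "Restructuring and severance": 8.0,
        "Share-based compensation expense": -12.0,   # shown in parentheses
        "Gain on sale of assets": 5.0,
        "Litigation settlement": 3.0,                # not in the taxonomy
    },
    bank_excluded={"sbc"},
)
print(result)
```

Unmapped labels are returned rather than guessed, which keeps the review focused on the exceptions instead of every row.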

Manual Workflow (Today)

- Step 1: Gather source packet (~20m). Collect the press release, investor deck, and 10-Q for each covered company.

- Step 2: Bridge EBITDA definitions (~35m). Map GAAP EBITDA, company-adjusted EBITDA, and bank-accepted EBITDA via add-backs/exclusions.

- Step 3: Update model rows (~35m). Map segment, balance-sheet, and cash-flow items into the new reporting period.

- Step 4: Normalize mechanics (~15m). Fix sign conventions and extend formulas while preserving model logic.

- Step 5: Tie out before call prep (~15m). Run IS/BS/CF checks and repeat across the coverage list.
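The tie-out in Step 5 amounts to a handful of differences that should all be zero. The sketch below shows three common checks under simplifying assumptions (retained earnings move only by net income less dividends); the field names, tolerance, and figures are hypothetical.

```python
def tie_out(prior_bs, curr_bs, net_income, dividends,
            net_change_in_cash, tol=0.5):
    """Return only the checks that fail; an empty dict means the model ties."""
    checks = {
        # Balance sheet: assets = liabilities + equity.
        "balance": curr_bs["assets"] - curr_bs["liabilities"] - curr_bs["equity"],
        # Cash flow statement to balance sheet: change in cash must agree.
        "cash": (curr_bs["cash"] - prior_bs["cash"]) - net_change_in_cash,
        # Income statement to balance sheet: retained earnings roll-forward.
        "retained_earnings": (curr_bs["retained_earnings"]
                              - prior_bs["retained_earnings"])
                             - (net_income - dividends),
    }
    return {name: diff for name, diff in checks.items() if abs(diff) > tol}


prior = {"assets": 900.0, "liabilities": 500.0, "equity": 400.0,
         "cash": 80.0, "retained_earnings": 250.0}
curr = {"assets": 960.0, "liabilities": 520.0, "equity": 440.0,
        "cash": 95.0, "retained_earnings": 290.0}

print(tie_out(prior, curr, net_income=50.0, dividends=10.0,
              net_change_in_cash=15.0))   # {}
print(tie_out(prior, curr, net_income=50.0, dividends=10.0,
              net_change_in_cash=18.0))   # {'cash': -3.0}
```

Returning only the failures fits an exception-based review across a coverage list: names that tie produce nothing to look at.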

Shortcut-Assisted Workflow

- Step 1: Ingest large source sets (~5m). Multi-company packets up to 250 MB can be processed in one run.

- Step 2: Pull filings from EDGAR automatically (~8m). 10-Q detail is combined with press release and deck extraction.

- Step 3: Apply company coverage skill (~6m). A saved taxonomy maps add-backs, exclusions, and line-item naming conventions.

- Step 4: Post with click-verify notation (~5m). Source-linked cell notes allow one-click validation before sign-off.

- Step 5: Clear exceptions before first call (~6m). Linkage checks surface issues so analyst review stays focused.

What This Unlocks

- Current models before first client call: updates are completed in time for morning prep windows.

- Coverage at full breadth: more names are updated in-season without sacrificing QC.

- Review over re-entry: analyst time shifts to validation and interpretation.

Every number still gets validated. Analysts spend their time reviewing and interpreting instead of re-entering data.